Project information

- Category: Deep Learning, Statistics

- Role: Solo Researcher

- Project date: March to May 2023

- Project URL: Paper

Addressing the Reproducibility Crisis in Deep Learning Research with Statistical Testing

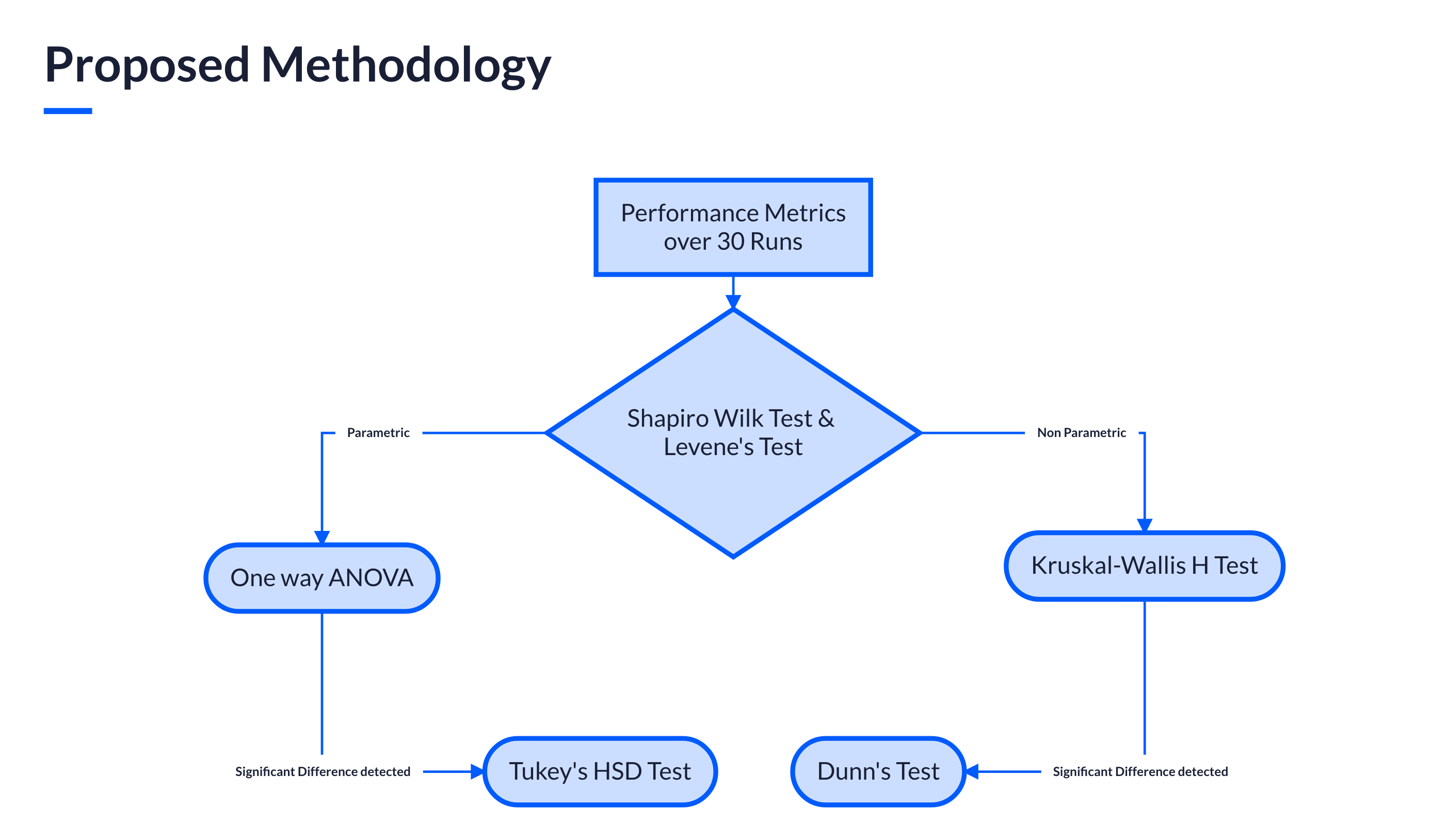

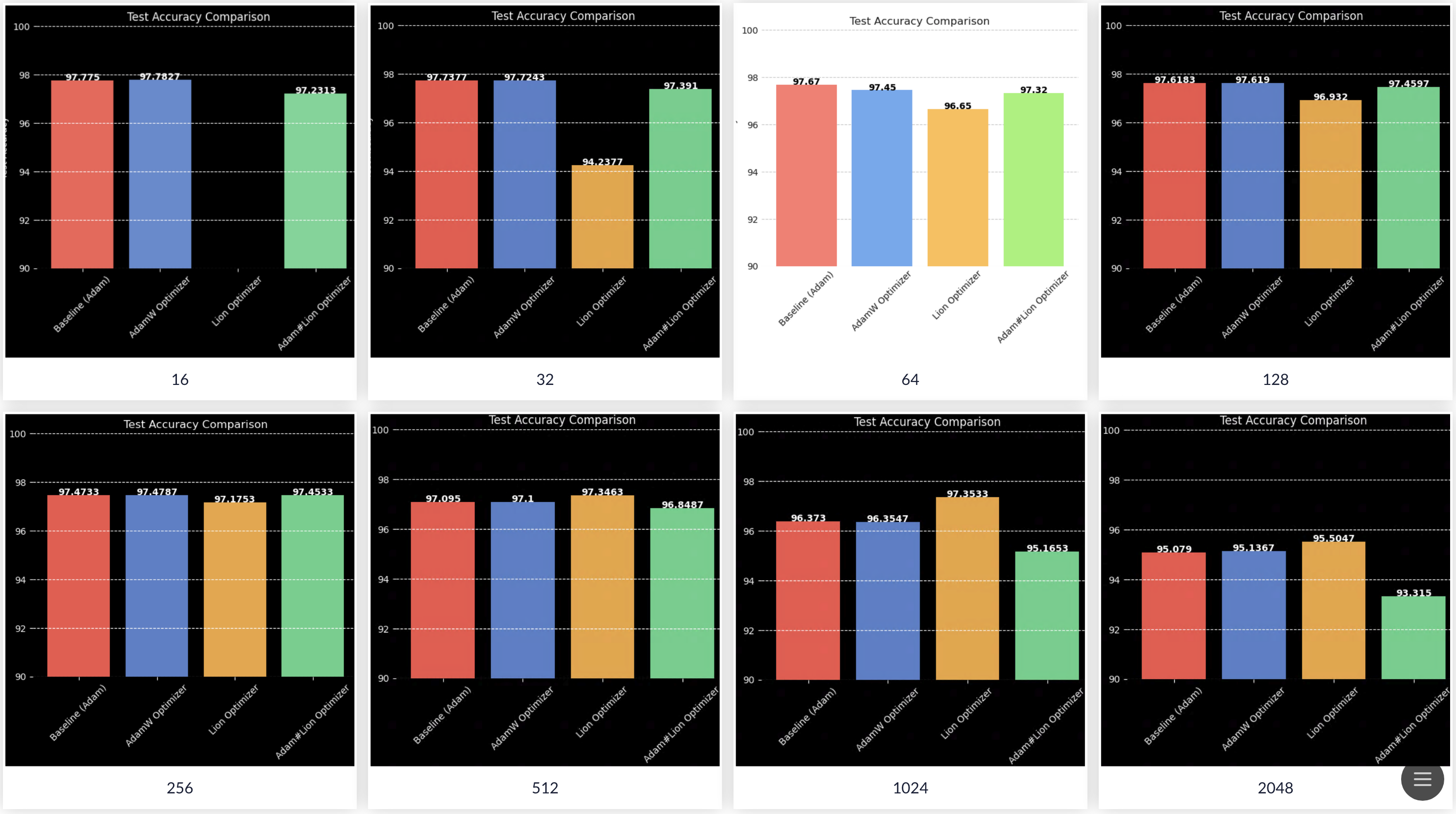

In this paper, I present an alternative approach to benchmarking deep learning optimizers that addresses the reproducibility crisis by using statistical tests. I compare the performance of a new optimizer against well-established ones and investigate how batch size and model architecture affect their performance. The primary objective is to improve how numerical results are reported in optimizer benchmarking papers, making the findings more reliable and trustworthy even when full reproducibility is not achievable.
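This summary does not say which statistical tests the paper uses. As one hedged illustration of the general idea, a two-sided permutation test can check whether two optimizers' final test accuracies, collected over several random seeds, differ by more than seed-to-seed noise would explain, without assuming the accuracies are normally distributed. The function name `permutation_test`, its parameters, and the accuracy values in the demo are all hypothetical. They are placeholders for illustration, not code or results from the paper.

```python
import random
import statistics
from typing import Sequence


def permutation_test(a: Sequence[float], b: Sequence[float],
                     n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference in mean accuracy.

    Hypothetical helper (not from the paper). Returns an estimated p-value:
    the fraction of random relabelings of the pooled runs whose
    |mean(group A) - mean(group B)| is at least the observed difference.
    """
    rng = random.Random(seed)  # fixed seed so the test itself is reproducible
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign runs to the two optimizers
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    # +1 in numerator and denominator keeps the estimate strictly positive
    return (hits + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # Placeholder per-seed accuracies, NOT results from the paper.
    baseline_opt = [0.80, 0.81, 0.82, 0.80, 0.81]
    new_opt = [0.90, 0.91, 0.92, 0.90, 0.91]
    print(f"p = {permutation_test(baseline_opt, new_opt):.4f}")
```

Reporting a p-value like this alongside the mean and spread over seeds, rather than a single best run, is one way to make a benchmark claim checkable even when exact reruns are not possible. Other choices (for example Welch's t-test, or corrections for comparing many optimizer and batch-size settings) may suit a given study better.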